Hyena Hierarchy: Towards Larger Convolutional Language Models

Attention is great – it powers many of the most exciting AI models, from ChatGPT to Stable Diffusion. But is it the only way to reach scale or unlock amazing capabilities such as in-context learning? This seems like a fundamental question for AI. Are these abilities rare or all around us? We started this work because we just want to know!

Hyena cubs are born with their eyes open and can walk within minutes! But also, Hyena is a new operator for large language models that uses long convolutions and gating, reaching attention quality with lower time complexity.

Pushing Sequence Lengths to the Limit

More practically, while Attention is amazing, it does have some drawbacks. Attention is fundamentally a quadratic operation, as it compares each pair of points in a sequence. This quadratic runtime has limited the amount of context that our models can take. In the approaches we’ll discuss below, we started to think about models that can handle sequences with millions of tokens, orders of magnitude longer than what Transformers can process today. (For example, imagine feeding ChatGPT a whole textbook as context and reasoning about it in a zero-shot setting, or having as context every keystroke you’ve ever written, or conditioning on a patient’s entire health record. Sounds amazing!) Below, we’ll talk about one path (potentially of many) that might get us there, and it is based on ideas from signal processing.
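To make the quadratic growth concrete, here is a back-of-the-envelope sketch. It assumes a naive implementation that materializes the full score matrix in 16-bit precision; the function names are ours, for illustration only. Memory-efficient kernels avoid storing the matrix, but the number of pairwise comparisons still grows as the square of the sequence length.

```python
def score_matrix_entries(seq_len: int) -> int:
    """Number of pairwise comparisons for one attention head."""
    return seq_len * seq_len


def score_matrix_bytes(seq_len: int, bytes_per_entry: int = 2) -> int:
    """Memory needed to store the full score matrix (assumed 16-bit entries)."""
    return score_matrix_entries(seq_len) * bytes_per_entry


# Sequence lengths chosen for illustration: 8k, 64k, and one million tokens.
for seq_len in (8_192, 65_536, 1_000_000):
    pairs = score_matrix_entries(seq_len)
    gib = score_matrix_bytes(seq_len) / 2**30
    print(f"L={seq_len:>9,}: {pairs:.2e} pairs, {gib:,.1f} GiB per head")
```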

Over the past few years, there has been exciting progress on models for long sequential data, such as time series, audio and more recently language (Gu, Goel, and Ré 2021; Dao et al. 2022). These domains are notoriously challenging for attention, since typical Transformers are trained to process sequences of at most 8k elements, although some adventurous researchers and developers are beginning to train models with context lengths of 32k or even 64k, thanks to recent advances in efficiency and memory scaling (Dao et al. 2022).

We’re excited to share our latest work on Hyena, a subquadratic-time layer that has the potential to significantly increase context length in sequence models. This work would not have been possible without remarkable advances in long sequence models (Bo 2021; Dao et al. 2022), alternative parameterizations for long convolutions (Gu, Goel, and Ré 2021; Romero et al. 2021; Li et al. 2022), and inspiring research on mechanistic understanding of Transformers (Olsson et al. 2022; Power et al. 2022; Nanda et al. 2023).

Hyena: a subquadratic-time attention alternative for long sequences.

Mechanistic Design to the Rescue

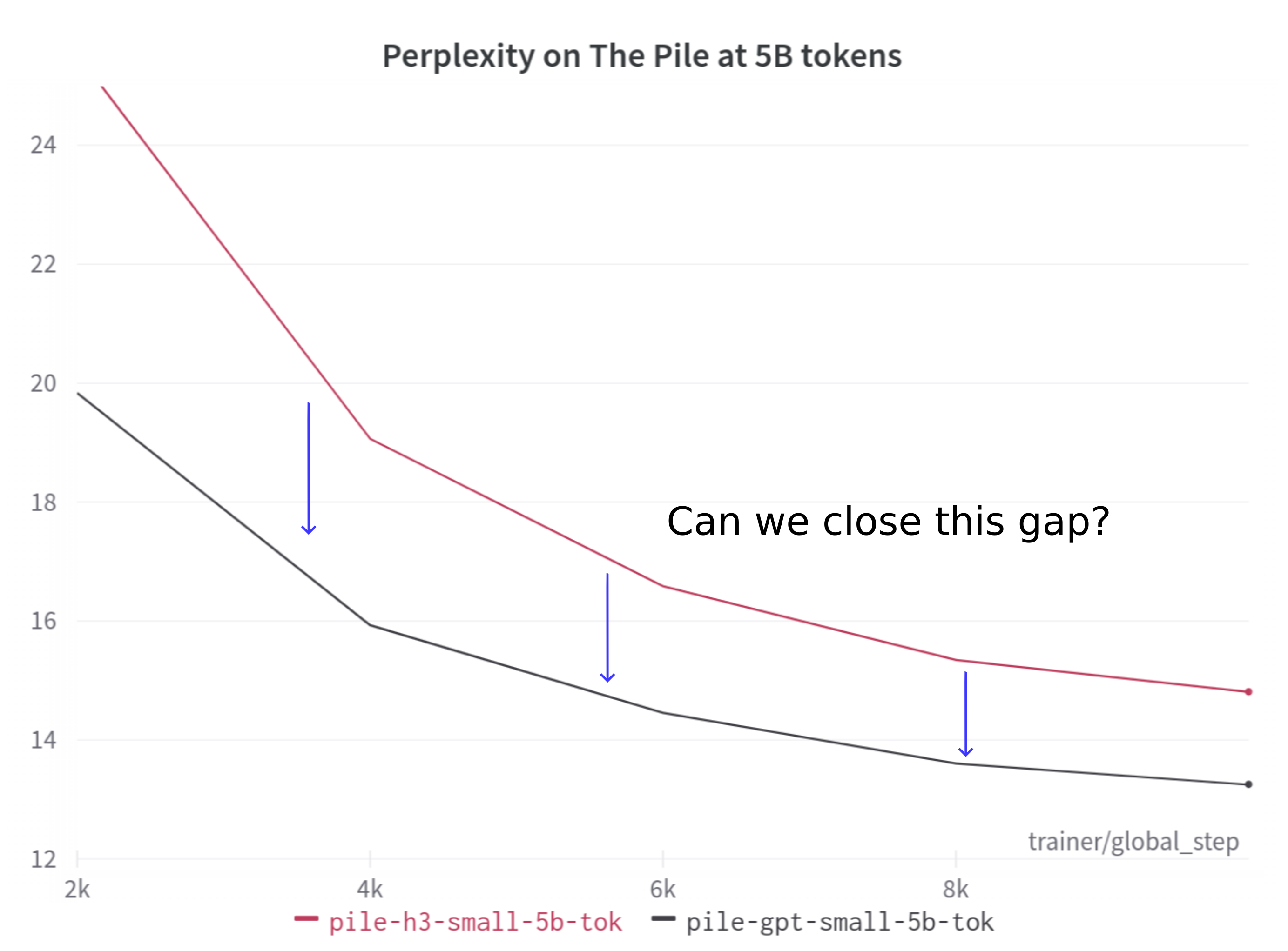

Our starting point is the rich literature on attention-free, subquadratic-time architectures. Recent developments in state-space models for language modeling have shown us that with just a little bit of attention - at most a few layers - we can match the quality of fully-attentional models up to the 2.7 billion parameter scale. However, fully removing attention from these new models (and training with the same tokenizer!) reveals a gap:

These last few attention layers have been bothering us. Is there computation required by our large language models that is fundamentally quadratic (i.e., involving all possible pairwise comparisons)? At this point, one may begin to wonder if attempts to find any effective alternative to attention are doomed from the start. A variety of attention replacements have been proposed over the last few years, and it remains challenging to evaluate the quality of a new architecture during the exploratory phase.

We found our breakthrough by scaling down the problem. Based on the groundwork done by H3 on using synthetic tasks for model design, we put together a collection of string manipulation and in-context learning tasks that take at most a few minutes to run on common hardware configurations, and used them to search for quality gaps between architectures.

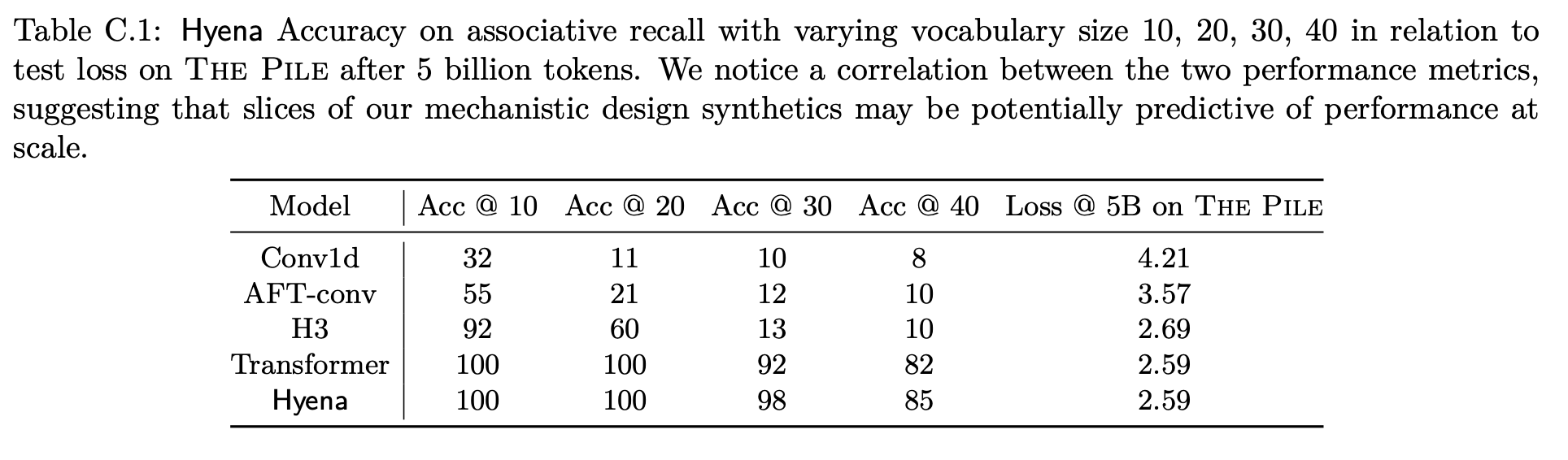

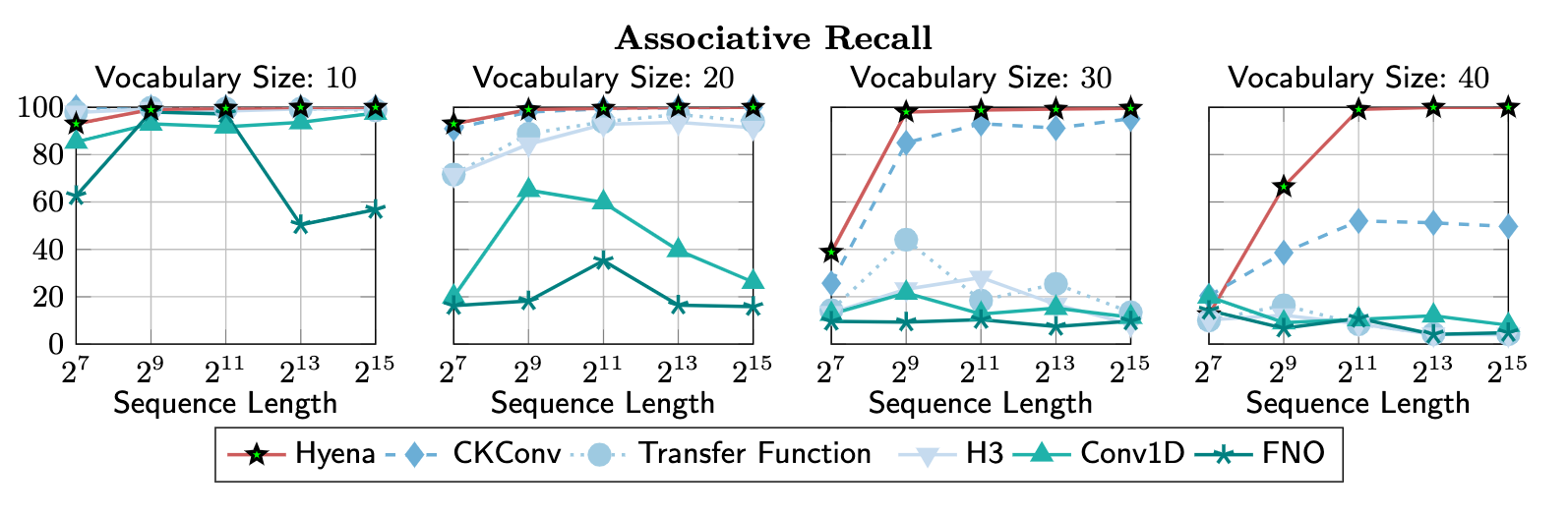

For example, by measuring how well models are able to perform associative recall in increasingly challenging settings (larger vocabularies and longer sequences), we see some correlation with pretraining loss on The Pile:

Although a variety of layers are capable of learning to recall and count (including staples such as RNNs!), we were surprised to see these performance differences mirror results found at different scales. The natural question was then: how do we shrink these gaps further?
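For concreteness, here is a minimal sketch of how one associative recall example could be generated. The exact task format used in our benchmarks is not spelled out in this post, so the layout below (key-value pairs followed by a single query key, with keys and values drawn from disjoint halves of the vocabulary) and the function name are assumptions for illustration.

```python
import random


def make_associative_recall_example(vocab_size, num_pairs, rng):
    """Return (tokens, target) for one associative recall example.

    Assumed layout: k1 v1 k2 v2 ... kn vn q, where q repeats one of the keys
    and the target is the value that followed that key.
    """
    half = vocab_size // 2
    keys = rng.sample(range(half), num_pairs)  # distinct keys, first half of vocab
    values = [rng.randrange(half, vocab_size) for _ in keys]  # second half of vocab

    tokens = []
    for key, value in zip(keys, values):
        tokens.extend([key, value])

    query_index = rng.randrange(num_pairs)
    tokens.append(keys[query_index])  # the query is the final token
    return tokens, values[query_index]


rng = random.Random(0)
tokens, target = make_associative_recall_example(vocab_size=40, num_pairs=8, rng=rng)
print(tokens, "->", target)
```

Making the task harder, as described above, amounts to increasing `vocab_size` and `num_pairs` (and hence the sequence length).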

Attention, Data-Control and Hyenas

One remarkable property of the attention layer is that it is data-controlled (or data-dependent):

\[y = A(x)x\]

The matrix \(A\) is formed on the fly, constructed as a particular function of the input. After training a variety of attention-based models, it becomes apparent that without data-control, any kind of in-context learning becomes much more challenging.
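As a small illustration of what data-control means, the sketch below builds the softmax attention matrix for two different inputs using the same weights. It is a simplified single-head, non-causal version without a value projection, chosen to mirror the form of the equation above; the weight names and sizes are arbitrary.

```python
import numpy as np


def attention_matrix(x, w_q, w_k):
    """Softmax attention matrix A(x) of shape (seq_len, seq_len)."""
    q, k = x @ w_q, x @ w_k
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
seq_len, dim = 6, 4
w_q = rng.normal(size=(dim, dim))
w_k = rng.normal(size=(dim, dim))

x1 = rng.normal(size=(seq_len, dim))
x2 = rng.normal(size=(seq_len, dim))

a1 = attention_matrix(x1, w_q, w_k)
a2 = attention_matrix(x2, w_q, w_k)
y1 = a1 @ x1  # y = A(x) x

# Same weights, different inputs: the mixing matrix itself changes.
print(np.abs(a1 - a2).max())
```

Contrast this with a layer whose mixing matrix is fixed after training: there, the parameters alone determine the matrix, and different inputs cannot steer the computation in the same way.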

Could we strengthen the data-control path in our attention-free layers? That is, is there a way to ensure that different inputs can steer our layer to perform substantially different computation?

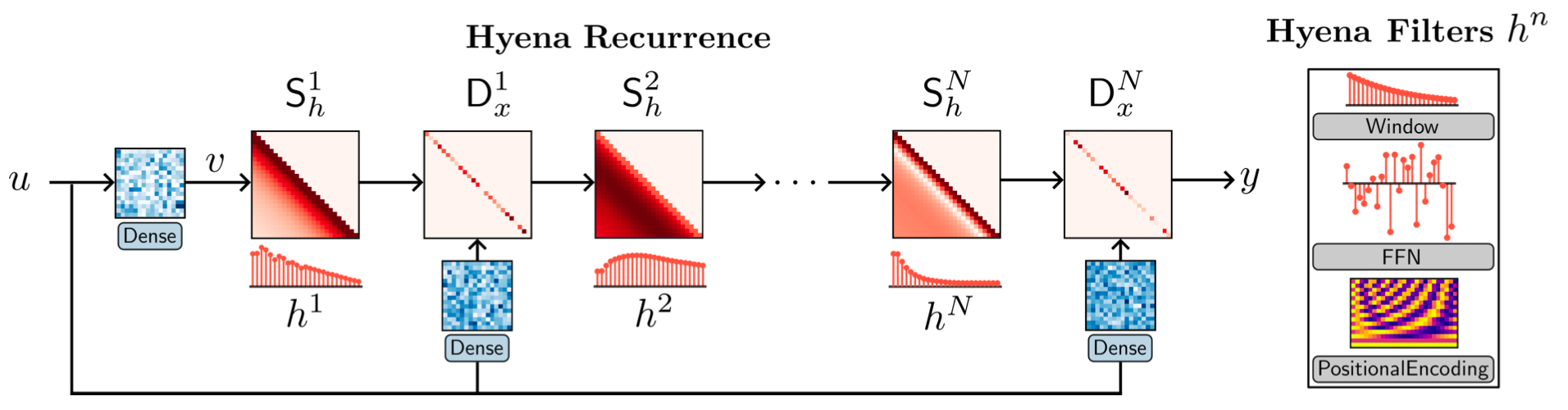

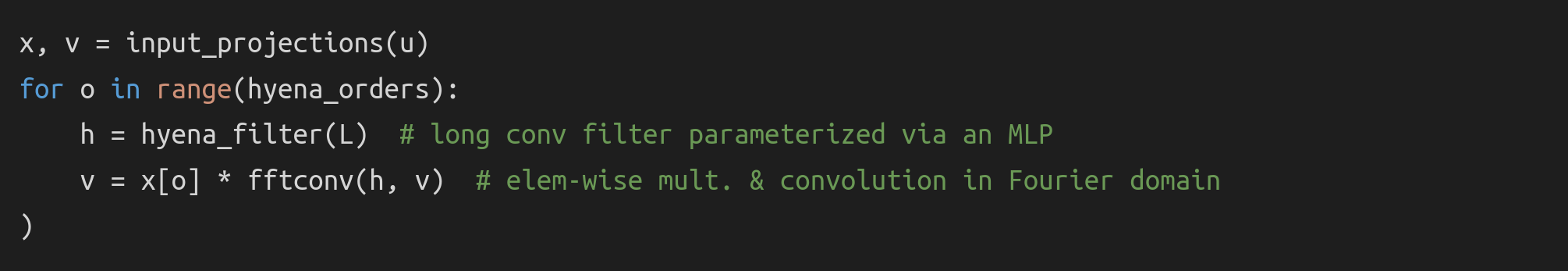

Enter Hyena:

With Hyena, we define a different data-controlled linear operator that does not compute \(A(x)\) explicitly, but instead defines an implicit decomposition into a sequence of matrices (evaluated via the for-loop above). And some exciting news for signal processing fans: we find filter parametrization (and custom input projections) to be one of the most impactful design choices, and come up with some recommendations. More on this soon!
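The sketch below is one way to write the interleaving of long convolutions and gating in NumPy. It is a simplified reading rather than our implementation: the projections are plain linear maps, the filters are random arrays instead of learned ones, and names such as `hyena_operator` and `in_projs` are assumptions made for this example.

```python
import numpy as np


def causal_fft_conv(h, z):
    """Per-channel causal convolution of filters h and signals z, both (seq_len, dim)."""
    seq_len = z.shape[0]
    n = 2 * seq_len  # zero-pad so the circular FFT product equals a linear convolution
    h_f = np.fft.rfft(h, n=n, axis=0)
    z_f = np.fft.rfft(z, n=n, axis=0)
    return np.fft.irfft(h_f * z_f, n=n, axis=0)[:seq_len]


def hyena_operator(u, in_projs, filters):
    """Alternate long convolutions with element-wise gating.

    u:        (seq_len, dim) input
    in_projs: order + 1 matrices of shape (dim, dim); the first gives the
              initial signal, the rest give the data-dependent gates
    filters:  `order` arrays of shape (seq_len, dim)
    """
    z = u @ in_projs[0]
    gates = [u @ w for w in in_projs[1:]]
    for gate, h in zip(gates, filters):
        z = gate * causal_fft_conv(h, z)  # gating is where the input steers the operator
    return z


rng = np.random.default_rng(0)
seq_len, dim, order = 32, 4, 2
u = rng.normal(size=(seq_len, dim))
in_projs = [rng.normal(size=(dim, dim)) for _ in range(order + 1)]
filters = [rng.normal(size=(seq_len, dim)) for _ in range(order)]
y = hyena_operator(u, in_projs, filters)
print(y.shape)
```

Note that the `seq_len` by `seq_len` matrix is never formed: each step touches only arrays of shape `(seq_len, dim)`.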

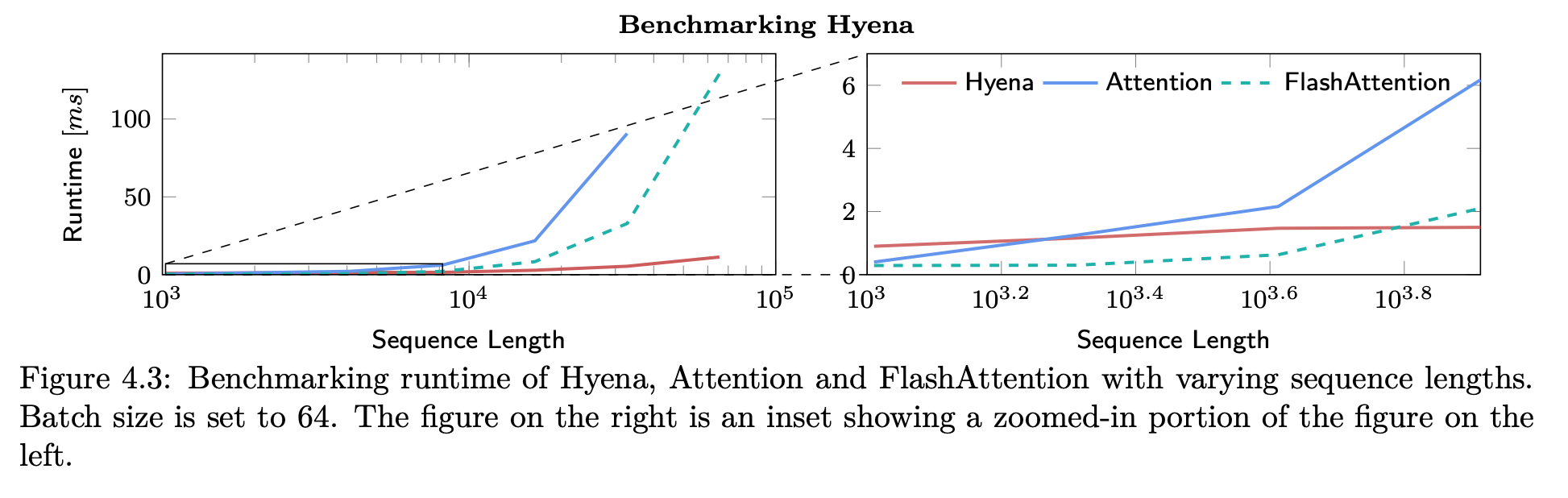

How about speed? Every step can be evaluated in subquadratic time, and we only need very few “orders” (at most 3) in our experiments. The crossover point between highly optimized FlashAttention and Hyena is at a sequence length of about 6k. At a sequence length of 100k, Hyenas are 100x faster!
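The reason a long convolution can run in subquadratic time is the standard FFT trick: a causal convolution with a filter as long as the sequence costs on the order of \(L^2\) operations when computed directly, but on the order of \(L \log L\) via the FFT. The single-channel sketch below checks that the two routes agree; it illustrates the general technique and is not our optimized kernel.

```python
import numpy as np


def direct_causal_conv(h, z):
    """Quadratic-time reference: y[t] = sum over s <= t of h[t - s] * z[s]."""
    seq_len = len(z)
    y = np.zeros(seq_len)
    for t in range(seq_len):
        for s in range(t + 1):
            y[t] += h[t - s] * z[s]
    return y


def fft_causal_conv(h, z):
    """Same result through the FFT, with zero-padding to avoid wrap-around."""
    seq_len = len(z)
    n = 2 * seq_len
    y = np.fft.irfft(np.fft.rfft(h, n=n) * np.fft.rfft(z, n=n), n=n)
    return y[:seq_len]


rng = np.random.default_rng(0)
h = rng.normal(size=256)
z = rng.normal(size=256)
print(np.allclose(direct_causal_conv(h, z), fft_causal_conv(h, z)))
```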

With a way to measure progress on our small mechanistic design benchmarks, we refine the design of Hyena, and observe that particular parametrizations of the long convolutions scale more favorably in sequence length and vocabulary size, especially when paired with shorter explicit filters:

This should not be too surprising – we know neural networks to be more expressive than many other function classes, and the same observation holds for parametrizations of convolutional filters. For example, neural fields [CIT] are now widely adopted in many graphics and compression applications due to their ability to accurately fit many signals.
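To give a flavor of what an implicit (neural) filter parametrization can look like, here is a hypothetical sketch: a tiny network maps a position \(t\) to the filter value at that position, and a decaying window is applied on top. The feature choice, the sine activation, the decay window, and all sizes are assumptions made for this example, not the exact recipe we recommend.

```python
import numpy as np


def implicit_filter(seq_len, dim, hidden=16, decay=4.0, seed=0):
    """Evaluate a small network at seq_len positions to get a (seq_len, dim) filter.

    The parameter count (3 * hidden + hidden * dim) does not depend on seq_len.
    """
    rng = np.random.default_rng(seed)  # random weights stand in for learned ones
    t = np.linspace(0.0, 1.0, seq_len)[:, None]  # normalized positions, (seq_len, 1)
    feats = np.concatenate(
        [t, np.sin(2 * np.pi * t), np.cos(2 * np.pi * t)], axis=1
    )  # simple positional features, (seq_len, 3)

    w1 = rng.normal(size=(3, hidden))
    w2 = rng.normal(size=(hidden, dim)) / np.sqrt(hidden)
    h = np.sin(feats @ w1) @ w2  # network output, (seq_len, dim)

    window = np.exp(-decay * t)  # assumed decay so far-away positions are damped
    return h * window


short = implicit_filter(seq_len=64, dim=4)
long = implicit_filter(seq_len=1024, dim=4)
print(short.shape, long.shape)
```

The same weights produce a filter of any length, which is what makes this kind of parametrization attractive for long convolutions: the filter can span the whole sequence without the parameter count growing with it.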

Hyenas on Language

We set out to evaluate Hyenas on a variety of tasks, including language modeling at scale (on WikiText-103, The Pile, PG-19), and downstream tasks such as SuperGLUE.

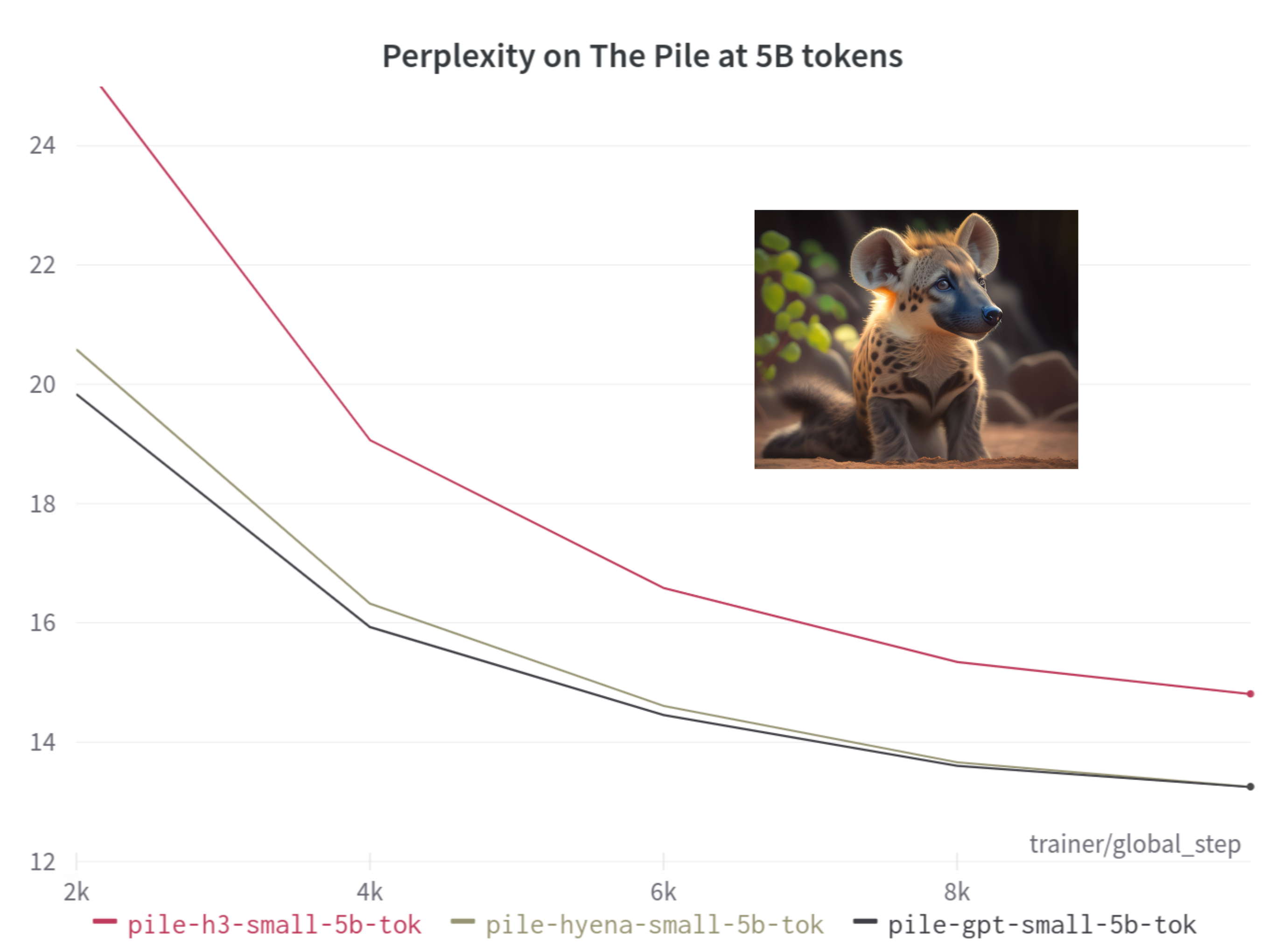

On The Pile, we see the performance gaps with Transformers start to close, given a fixed FLOP budget. (Hyenas are crafty creatures: they don’t leave perplexity points on the table!)

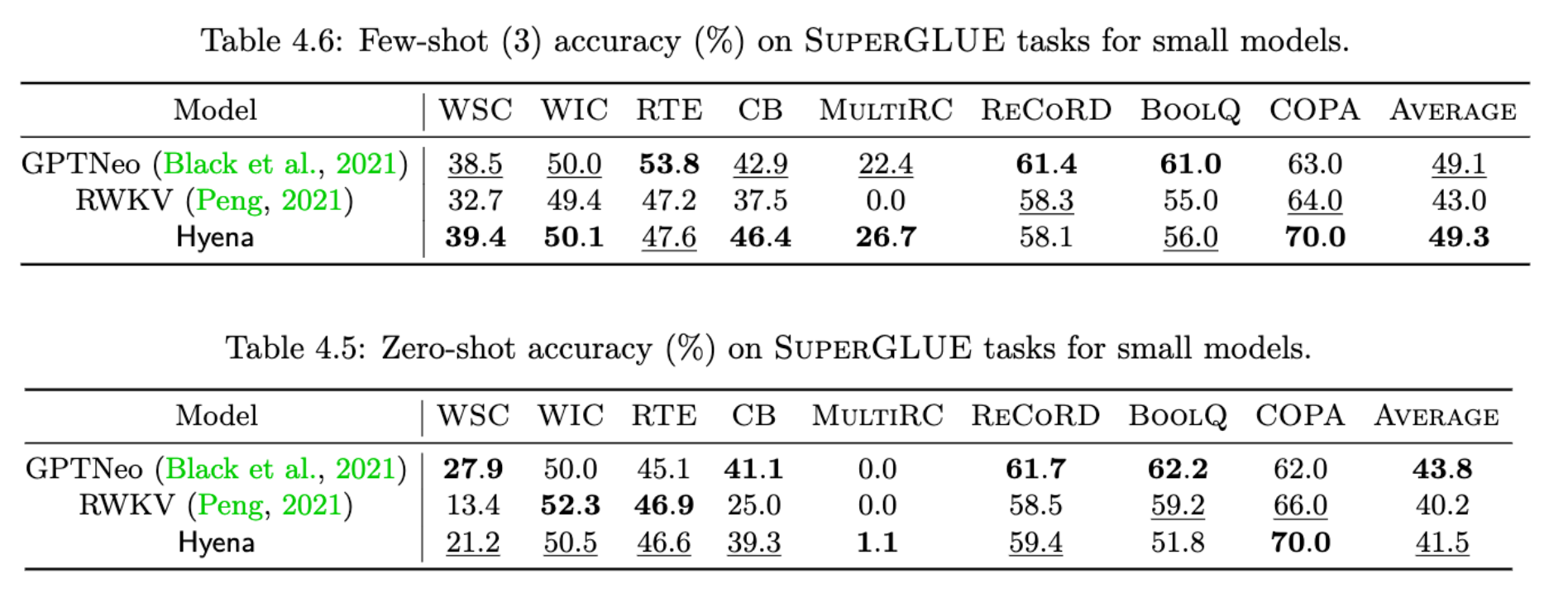

With larger models, we reach similar perplexity as a regular GPT architecture with a smaller FLOP budget. On the standard long-range language benchmark PG-19, we train Hyena with 16k context and reach a perplexity of 14.6. We also perform downstream evaluations on several zero- and few-shot tasks. (Turns out Hyenas are pretty good few-shot learners too!)

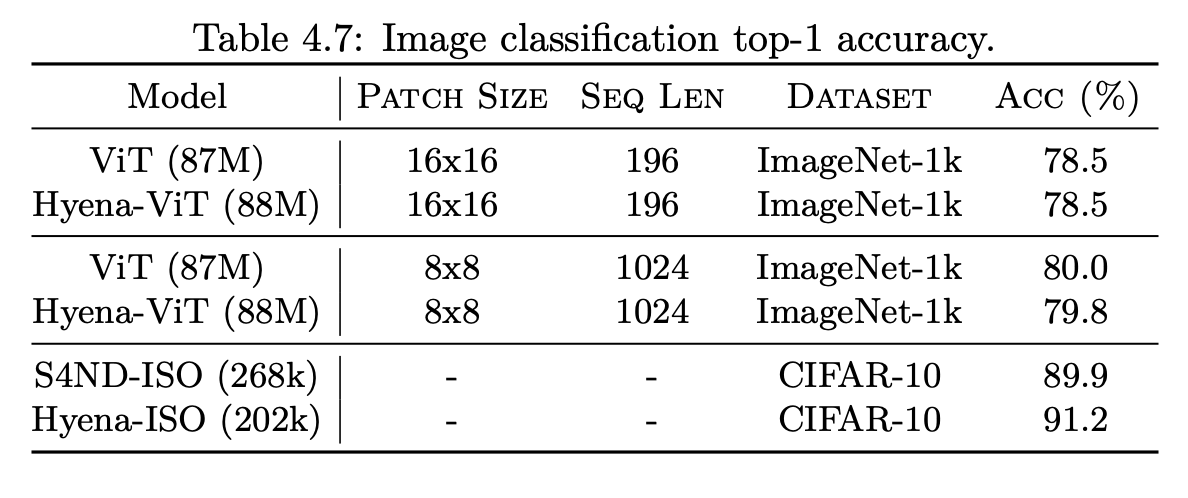

Similarly to attention, Hyena can be used in Vision Transformers. Hyena matches Transformer performance on ImageNet, suggesting that mechanistic design benchmarks may be informative of performance beyond language – perhaps in many domains where attention “simply works”?

What’s Next

With Hyena, we’re just scratching the surface of what can be achieved with models grounded in signal processing and systems principles, designed by iterating on a curated set of synthetic tasks. We’re excited to push training to even longer sequence lengths (1 million?), test on new domains, and accelerate inference by switching to a recurrent view – in the spirit of the state passing method employed by H3, or the RWKV recurrence. With longer context models, we hope to accelerate the next phase of breakthroughs in deep learning!

We’d love to hear from you about exciting applications and challenges that could benefit from long-sequence models.

Michael Poli: poli@stanford.edu; Stefano Massaroli: stefano.massaroli@mila.quebec; Eric Nguyen: etnguyen@stanford.edu

Acknowledgements

Thanks to all the readers who provided feedback on this post and release: Karan Goel, Albert Gu, Avanika Narayan, Khaled Saab, Michael Zhang, Elliot Epstein, Winnie Xu and Sabri Eyuboglu.

References

- Gu, Albert, Karan Goel, and Christopher Ré. 2021. “Efficiently Modeling Long Sequences with Structured State Spaces.”

- Dao, Tri, Daniel Y Fu, Khaled K Saab, Armin W Thomas, Atri Rudra, and Christopher Ré. 2022. “Hungry Hungry Hippos: Towards Language Modeling with State Space Models.”

- Dao, Tri, Daniel Y Fu, Stefano Ermon, Atri Rudra, and Christopher Ré. 2022. “FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness.”

- Bo, PENG. 2021. “BlinkDL/RWKV-LM: 0.01.” Zenodo. https://doi.org/10.5281/zenodo.5196577.

- Romero, David W, Anna Kuzina, Erik J Bekkers, Jakub M Tomczak, and Mark Hoogendoorn. 2021. “CKConv: Continuous Kernel Convolution for Sequential Data.”

- Li, Yuhong, Tianle Cai, Yi Zhang, Deming Chen, and Debadeepta Dey. 2022. “What Makes Convolutional Models Great on Long Sequence Modeling?”

- Olsson, Catherine, Nelson Elhage, Neel Nanda, Nicholas Joseph, Nova DasSarma, Tom Henighan, Ben Mann, et al. 2022. “In-Context Learning and Induction Heads.”

- Power, Alethea, Yuri Burda, Harri Edwards, Igor Babuschkin, and Vedant Misra. 2022. “Grokking: Generalization beyond Overfitting on Small Algorithmic Datasets.”

- Nanda, Neel, Lawrence Chan, Tom Lieberum, Jess Smith, and Jacob Steinhardt. 2023. “Progress Measures for Grokking via Mechanistic Interpretability.”